Every major search platform now uses generative AI in its results. Sixty percent of US consumers use AI to search for information, while 26% report using it for shopping. And the landscape has expanded: as AI platforms like ChatGPT and Perplexity grow in popularity, Google is no longer the de facto destination for search (although it still holds the vast majority of traffic share). Still, the writing is on the wall: if you want to get in front of customers, you now need to be found in AI-powered search.

That raises the question: where do AI systems find the content they recommend to consumers? How do they choose what to share? And how can you influence them?

The good news is that most AI platforms rely on traditional search results from Google or Bing to inform the content they source. This means that your current SEO efforts are still deeply important, building a foundation for findability. At the same time, those platforms are actively developing competing indexes to free them from reliance on traditional search, and every day we see new capabilities emerging, like agentic commerce, that evolve how AI systems understand and share information.

For any hope of competing and winning in an AI-first world, you have to understand how and why content is sourced by AI search platforms. They’re already shaping how consumers discover and buy products. To stay visible, you must ensure your content is accessible at the source where answers begin.

The customer journey accelerates inside AI platforms, powered by intent

At the end of August, it was reported that OpenAI’s ChatGPT was using a third-party API (SerpAPI) to access scraped Google search results. This data helps ChatGPT deliver real-time answers to topical queries in areas outside its training data or OpenAI’s internal index. And OpenAI isn’t alone in its use of SerpAPI: Meta and Perplexity are reportedly customers as well.

Why does this matter? Because the customer journey increasingly unfolds inside AI interfaces owned by these companies. Consumers ask complex, conversational questions in their app of choice and receive instant recommendations without ever opening a browser tab.

That single interaction can move a consumer from discovery to decision. The customer journey is compressed to provide a satisfying answer to their need, immediately. It cuts out all the effort of discovering content, evaluating it, comparing it, verifying it, and ultimately choosing an option. AI search focuses on knowing and serving consumer intent, making it fast and simple for consumers to get the answers they want.

How AI platforms source content

Understanding how AI systems source content is the key to ensuring your brand content is accessible to them, and therefore found by consumers. And while these companies aren’t required to disclose their data sources, we have insight into many of them.

Website indexes: All findable website pages

Determines what content is findable, in both traditional and AI search. Every website page that you want consumers to visit needs to be in a website index.

AI platforms currently pull from indexes of scraped web content to both train their models and to answer queries in real-time:

- The Google and Bing indexes provide the most complete maps of the web. ChatGPT, Claude, and Perplexity access them through APIs or intermediary services (like SerpAPI).

- Proprietary indexes are being built by companies such as OpenAI, Meta, and Perplexity in order to expand each platform’s reach and establish autonomy outside traditional search. These systems aim to crawl and categorize web content directly.

Your website’s indexability determines whether or not you’re included. Pages invisible to crawlers won’t be indexed or cited, regardless of how relevant or high-quality they are. Because website indexes are the source of truth for all search systems, you need to be sure any content you want consumers to see can be found and indexed by crawler bots.

Model training data: Core brand knowledge

Informs knowledge about your brand: its history, values, leadership, industry / area of expertise, and other core evergreen facts.

Model training shapes the understanding that large language models (LLMs) have of your brand. Aiming for this source is playing the long game, as most models are trained on data that stops a year or more before their release date, so it captures evergreen brand information rather than current campaigns or offers.

Brands can strengthen visibility here by ensuring foundational content communicates consistent, factual details, such as company history, leadership, values, sustainability commitments, and flagship products. These elements teach the model who you are and why you matter.

When a consumer asks a question like “Which US-based apparel companies use ethical manufacturing?”, the model can leverage its training knowledge of brand attributes and long-standing commitments to provide the best answer. Make sure any stand-out variables that could differentiate and uplift your brand are represented on your website and accessible to training bots.

Retrieval-augmented generation (RAG): Up-to-date information

Helps LLMs access current information (like sales, inventory, news, and more) to ensure consumers get up-to-date, accurate responses to their queries.

It’s critical that you understand live retrieval if you want consumers to find your most up-to-date information. RAG extends a model’s usefulness beyond its training cutoff date by retrieving current information from live sources, usually by examining the top-ranking search results and evaluating them for inclusion in responses. Many LLMs rely on Bing’s Web Search API for this process, given Google’s reluctance to share proprietary data. However, plenty of services use scraped search results to refer to Google’s top-ranked pages (as we saw with the news about SerpAPI and OpenAI). That’s just one reason why it’s still critical to build your SEO foundations and rank well in traditional search, even as you optimize for new AI platforms.
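To make the retrieval step more concrete, here is a deliberately simplified Python sketch of the RAG pattern: pick the pages that best match a query, then hand them to the model as context. Everything in it is an assumption for illustration. The `PAGES` data, the URLs, and the keyword-overlap scoring are hypothetical stand-ins; real platforms rely on search APIs and far more sophisticated ranking.

```python
import re

def tokenize(text: str) -> set:
    """Lowercase a string and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, pages: dict, top_k: int = 2) -> list:
    """Return the URLs of the top_k pages sharing the most tokens with the query.

    Keyword overlap is a toy stand-in for a real search ranking.
    """
    query_tokens = tokenize(query)
    ranked = sorted(
        pages,
        key=lambda url: len(query_tokens & tokenize(pages[url])),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query: str, pages: dict, top_k: int = 2) -> str:
    """Assemble the retrieved pages and the question into a single prompt string."""
    urls = retrieve(query, pages, top_k)
    context = "\n".join(f"[{url}] {pages[url]}" for url in urls)
    return f"Context:\n{context}\n\nQuestion: {query}"

# Hypothetical page contents keyed by URL.
PAGES = {
    "https://example.com/suv-pricing": "Electric SUV pricing updated this week: models from $42,000.",
    "https://example.com/about": "Our company history and sustainability values.",
    "https://example.com/sedans": "Gas sedans on sale this month.",
}

if __name__ == "__main__":
    print(build_prompt("electric SUV pricing", PAGES, top_k=1))
```

The takeaway for brands is in `retrieve`: a page that is not in the candidate set, or that ranks poorly within it, never reaches the prompt at all.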


RAG allows AI platforms to find and share information including (but not limited to):

- Current inventory

- Accurate pricing

- Seasonal promotions

- Current events and news

- Recent reviews

When prompted with a query about current product pricing, seasonal promotions, or recent news, such as “Which electric SUVs are under $50,000 right now?”, ChatGPT supplements its trained brand knowledge with fresh web data to answer. A brand known for affordable, eco-friendly vehicles is more likely to appear in that answer than one known for gas-powered luxury ones, so consider how your core brand knowledge (the data included in trained models) reflects your ideal consumer’s journey when they’re closer to conversion.

The role of bots and content visibility

Crawler bots are the intermediaries between LLMs and your content. Different kinds of bots have different purposes, and knowing which bots are hitting your site can give you deep insight into how your content is being used. Some bots index websites, others train LLMs, and still others are used for live retrieval.

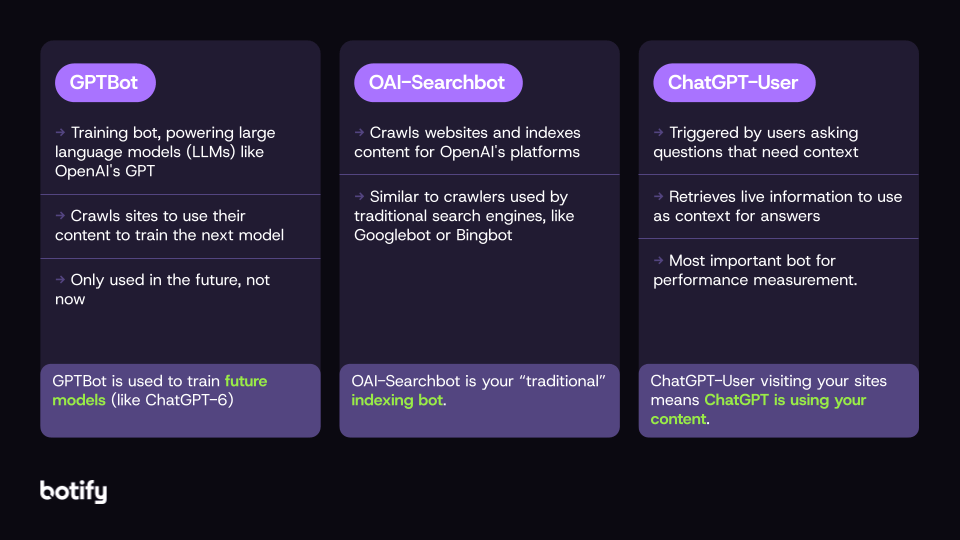

Let’s use OpenAI as an example: with multiple crawler bots, you can see how each is used and its importance in the content sourcing ecosystem.

- GPTBot crawls sites to find content for training OpenAI’s future models. If it’s visiting your pages, you can assume they’re being considered as training data. Make sure you have rules in place to control what this bot finds to train on.

- OAI-SearchBot crawls sites to find and include their pages in OpenAI’s own search index, similar to Googlebot or Bingbot.

- ChatGPT-User fetches pages to use as fresh context for answers. It’s triggered by consumers asking questions in ChatGPT; because of this, it’s incredibly valuable as an indicator of whether your content is being used (or not).

Analyzing your log data allows you to investigate and understand which bots are finding what content on your site. We’ve shared strategies for using log file analysis to optimize your site here.
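As a starting point for that kind of analysis, the sketch below maps the three OpenAI crawlers described above to their purpose and counts hits by purpose. It is a minimal illustration, not a full log pipeline: the sample user-agent strings are made up for the example, and because user-agent headers can be spoofed, a production setup would also verify requests against the IP ranges each vendor publishes.

```python
from collections import Counter
from typing import Iterable, Optional

# Purpose of each OpenAI crawler, as described in the list above.
BOT_PURPOSES = {
    "GPTBot": "training",
    "OAI-SearchBot": "indexing",
    "ChatGPT-User": "live retrieval",
}

def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the purpose of a known crawler, or None for anything else."""
    ua = user_agent.lower()
    for token, purpose in BOT_PURPOSES.items():
        if token.lower() in ua:
            return purpose
    return None

def count_hits_by_purpose(user_agents: Iterable[str]) -> Counter:
    """Count requests per crawler purpose, ignoring unrecognized user agents."""
    counts = Counter()
    for ua in user_agents:
        purpose = classify_user_agent(ua)
        if purpose is not None:
            counts[purpose] += 1
    return counts

# Illustrative user-agent strings (not copied from real traffic).
SAMPLE_USER_AGENTS = [
    "Mozilla/5.0 (compatible; GPTBot/1.1)",
    "Mozilla/5.0 (compatible; ChatGPT-User/1.0)",
    "Mozilla/5.0 (compatible; ChatGPT-User/1.0)",
    "Mozilla/5.0 (compatible; OAI-SearchBot/1.0)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0",
]

if __name__ == "__main__":
    print(count_hits_by_purpose(SAMPLE_USER_AGENTS))
```

A rising "live retrieval" count on a page suggests it is being pulled into answers; a page with only "training" hits is being read but not necessarily surfaced.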

Other ways AI platforms learn about your brand

You can control the content on your website and how bots access it, but you can’t control the rest of the internet. It’s important to remember that your brand knowledge can be shaped by outside forces as well, like:

- Review sites

- Competitor content

- Partner / co-marketing content

- Forums

- News & events

- Press releases

Anywhere your brand or products are mentioned can be fair game for training, indexing, and live retrieval. That’s why it’s critical to make AI search a key component of your broader marketing strategy. Consider the overall picture of how your brand shows up in the world and plan to influence your third-party presence accordingly.

How to ensure AI platforms can find and share your content

Your technical site health is your foundation for success in AI search. Before anything else, you need to be found — and the core of being found is in technical site optimization. Here are critical steps you’ll need to take:

Understand bot activity on your site

Start by understanding how various bots access your site. Identify which crawlers visit your pages, and how often they succeed in retrieving content.

Crawl logs reveal which sections of your site receive consistent attention and which may be restricted by things like robots.txt directives, authentication walls, or rendering issues. In Botify Analytics, LogAnalyzer and CustomReports can track bot behavior across Google, Bing, OpenAI, Perplexity, and many more top AI search platforms.
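If you want a feel for what this looks like in raw data, here is a small Python sketch that reads access-log lines and reports, per crawler, how many requests it made, what share returned a 200, and which paths failed. It assumes the common "combined" log format used by many web servers; the sample lines, IP addresses, and the list of bot tokens are hypothetical, and this is not a description of how LogAnalyzer works.

```python
import re
from collections import defaultdict

# Assumed list of crawler tokens to look for in the User-Agent field.
BOT_TOKENS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "Googlebot", "bingbot")

# Combined log format: ip ident user [time] "METHOD path proto" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)

def bot_crawl_report(lines):
    """Summarize hits, success rate, and failed paths for each known crawler."""
    hits = defaultdict(int)
    successes = defaultdict(int)
    failed_paths = defaultdict(list)
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that do not follow the expected format
        ua = match.group("ua").lower()
        bot = next((t for t in BOT_TOKENS if t.lower() in ua), None)
        if bot is None:
            continue  # not a crawler we are tracking
        hits[bot] += 1
        if match.group("status") == "200":
            successes[bot] += 1
        else:
            failed_paths[bot].append(match.group("path"))
    return {
        bot: {
            "hits": hits[bot],
            "success_rate": successes[bot] / hits[bot],
            "failed_paths": failed_paths[bot],
        }
        for bot in hits
    }

# Made-up log lines for illustration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Sep/2025:10:00:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.5 - - [01/Sep/2025:10:00:02 +0000] "GET /account/orders HTTP/1.1" 403 312 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '198.51.100.7 - - [01/Sep/2025:10:01:00 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; ChatGPT-User/1.0)"',
]

if __name__ == "__main__":
    for bot, stats in bot_crawl_report(SAMPLE_LOG).items():
        print(bot, stats)
```

A low success rate or a long list of failed paths for a given bot is a prompt to check robots.txt rules, authentication walls, or rendering problems on those sections.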

Build an AI governance plan

Every brand will need to establish rules around what bots can and can’t find on their websites. Shaping your governance plan for what is and isn’t accessible to specific bots allows you to get granular with your control over what they find. To learn more about building a nuanced and effective AI governance plan, read Why You Need an AI Bot Governance Plan (and How to Build One) or download our AI Search Playbook for a detailed questionnaire.
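One common way to express these rules is a robots.txt file. The Python sketch below uses the standard library’s `urllib.robotparser` to check a draft policy before you publish it. The policy itself, including the `/account/` and `/internal/` paths and the `example.com` domain, is a hypothetical example rather than a recommendation, and robots.txt is advisory: it only governs crawlers that choose to honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft policy: keep the training crawler out of private areas,
# let the live-retrieval agent read everything, and apply a default to all others.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /account/
Disallow: /internal/

User-agent: ChatGPT-User
Allow: /

User-agent: *
Disallow: /account/
"""

def load_policy(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a parser that can answer can_fetch() questions."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def audit(parser: RobotFileParser, checks):
    """Return {(bot, url): allowed} for each (bot, url) pair in checks."""
    return {(bot, url): parser.can_fetch(bot, url) for bot, url in checks}

if __name__ == "__main__":
    policy = load_policy(ROBOTS_TXT)
    checks = [
        ("GPTBot", "https://example.com/internal/roadmap"),
        ("GPTBot", "https://example.com/products/"),
        ("ChatGPT-User", "https://example.com/products/"),
        ("SomeOtherBot", "https://example.com/account/login"),
    ]
    for (bot, url), allowed in audit(policy, checks).items():
        print(f"{bot:14} {'allow' if allowed else 'block'}  {url}")
```

Running a list of bot and URL pairs through a check like this makes it easier to confirm that a governance decision on paper matches what crawlers will actually be told.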

Strengthen indexability across platforms and remove technical barriers

Ensure that your critical pages are discoverable by all major crawlers and indexes. Your pages should load quickly and return consistent 200-status responses. For enterprise sites managing thousands of pages, using tools like SmartIndex can help scale indexability strategies by streamlining and automating sitemap generation and submission, reducing delays between publishing and indexation.
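For a sense of what automated sitemap generation involves at its simplest, here is a minimal Python sketch that turns a list of URLs and last-modified dates into sitemap XML following the sitemaps.org protocol. The URLs and dates are placeholders, and this is only an illustration of the underlying file format, not of how SmartIndex is implemented.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages) -> str:
    """Build sitemap XML from an iterable of (url, lastmod) pairs.

    lastmod is expected as a W3C date string such as "2025-01-15".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder pages for illustration.
SAMPLE_PAGES = [
    ("https://example.com/products/shoes", "2025-01-15"),
    ("https://example.com/products/jackets", "2025-01-20"),
]

if __name__ == "__main__":
    print(build_sitemap(SAMPLE_PAGES))
```

The protocol caps a single sitemap file at 50,000 URLs, so large sites split their URLs across several files and list them in a sitemap index.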

Structured data makes your content easier to read and understand for bots. And when consumer satisfaction depends on getting a fast, comprehensive answer, AI bots want content they can parse quickly. Using schema can help your content appear in AI-augmented features like AI Overviews while also boosting your presence in traditional search elements like rich results — a real win-win.
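As an example of what schema markup looks like in practice, the Python sketch below builds a schema.org `Product` description as JSON-LD and wraps it in the script tag that goes into a page’s HTML. The product name, price, and currency are invented for the example; which properties matter for your pages depends on your content type.

```python
import json

def product_json_ld(name: str, description: str, price: float,
                    currency: str, in_stock: bool) -> str:
    """Return a <script> tag containing schema.org Product markup as JSON-LD."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'

if __name__ == "__main__":
    # Invented product details for illustration.
    print(product_json_ld(
        name="Trail Running Shoe",
        description="Lightweight trail shoe with recycled mesh upper.",
        price=89,
        currency="USD",
        in_stock=True,
    ))
```

Because the price and availability live in explicit fields, a bot does not have to infer them from surrounding copy.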

Don’t forget that JavaScript-heavy pages often hinder crawling and rendering. In fact, most AI bots can’t render JavaScript at all, meaning that any content relying on it could be completely invisible to them — and to consumers in AI search. With SpeedWorkers, you can serve up pre-rendered versions of your pages to help make sure all of your content is accessible to bots.
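A quick way to spot this problem is to look at a page the way a non-rendering bot does: read the raw HTML, ignore scripts, and check whether your key content is there. The Python sketch below does exactly that with the standard library. The two sample pages and the phrases being checked are hypothetical, and a real audit would fetch the live HTML for each URL.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect text from HTML while skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)

def visible_text(html: str) -> str:
    """Return the text a bot sees in raw HTML without executing JavaScript."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())

def missing_without_js(html: str, required_phrases) -> list:
    """List the required phrases that do not appear in the raw HTML text."""
    text = visible_text(html).lower()
    return [p for p in required_phrases if p.lower() not in text]

# Hypothetical pages: one server-rendered, one that relies on client-side JavaScript.
SERVER_RENDERED = "<html><body><h1>Trail Shoes</h1><p>Price: $89.00</p></body></html>"
CLIENT_RENDERED = (
    "<html><body><div id='app'></div>"
    "<script>render('Trail Shoes', '$89.00')</script></body></html>"
)

if __name__ == "__main__":
    phrases = ["Trail Shoes", "$89.00"]
    print("server-rendered missing:", missing_without_js(SERVER_RENDERED, phrases))
    print("client-rendered missing:", missing_without_js(CLIENT_RENDERED, phrases))
```

Any phrase reported as missing is content that only exists after JavaScript runs, which is the content a pre-rendered version needs to expose.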

Optimize for consumer intent and update at scale

A compressed customer journey means that while consumers may not click into all of your full-funnel content, AI search platforms need to find and summarize it in order to pave the way to conversion. Content that doesn’t serve your customer journey will be less relevant than ever, while content that anticipates and answers your consumer’s needs becomes even more important. Mine your existing search data (like your Google Search Console keywords) for indications of intent, and use what you find to craft a content strategy that mirrors your consumer’s intent, desired outcome, and journey.

In addition, AI systems that rely on RAG respond quickly to updated or newly indexed pages. Publishing and refreshing time-sensitive content such as promotions, launches, or event details can help the retrieval layer detect and serve the latest information.

Content optimization can be difficult at scale, so when it comes to titles and meta descriptions, you can use SmartContent to quickly generate engaging copy rooted in your brand identity, consumer intent, and data, and then deploy those optimizations immediately site-wide.

Measure your AI search presence and iterate

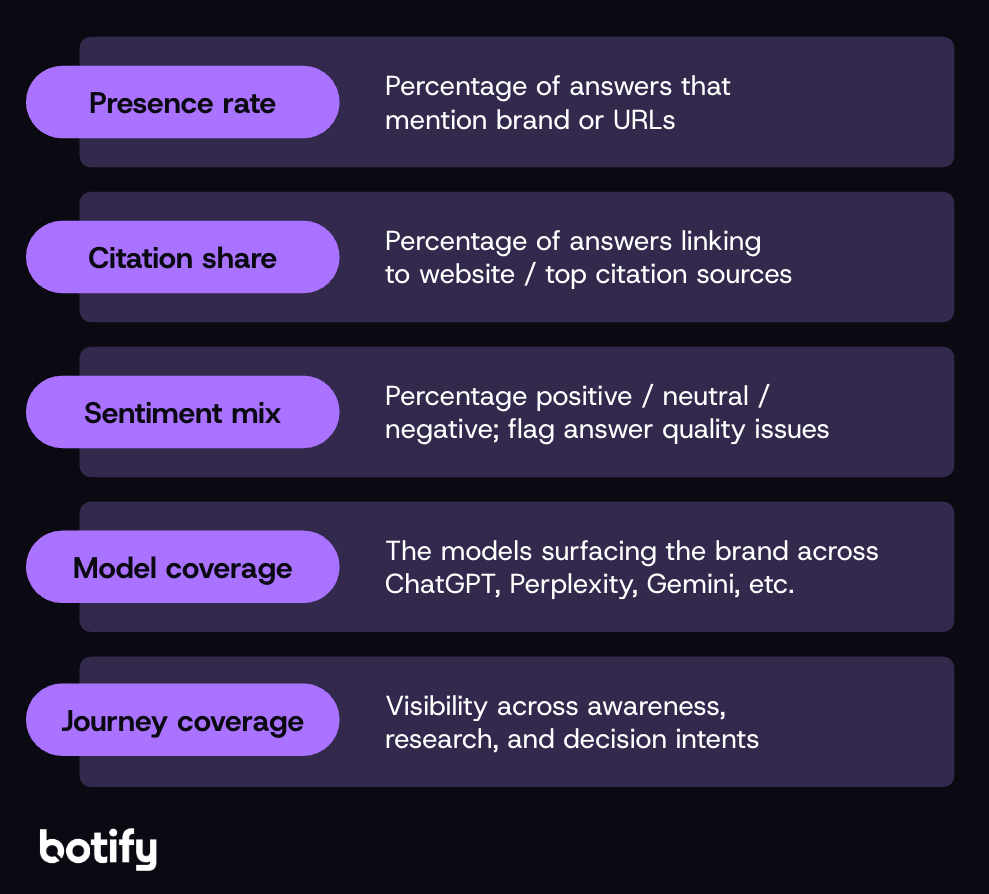

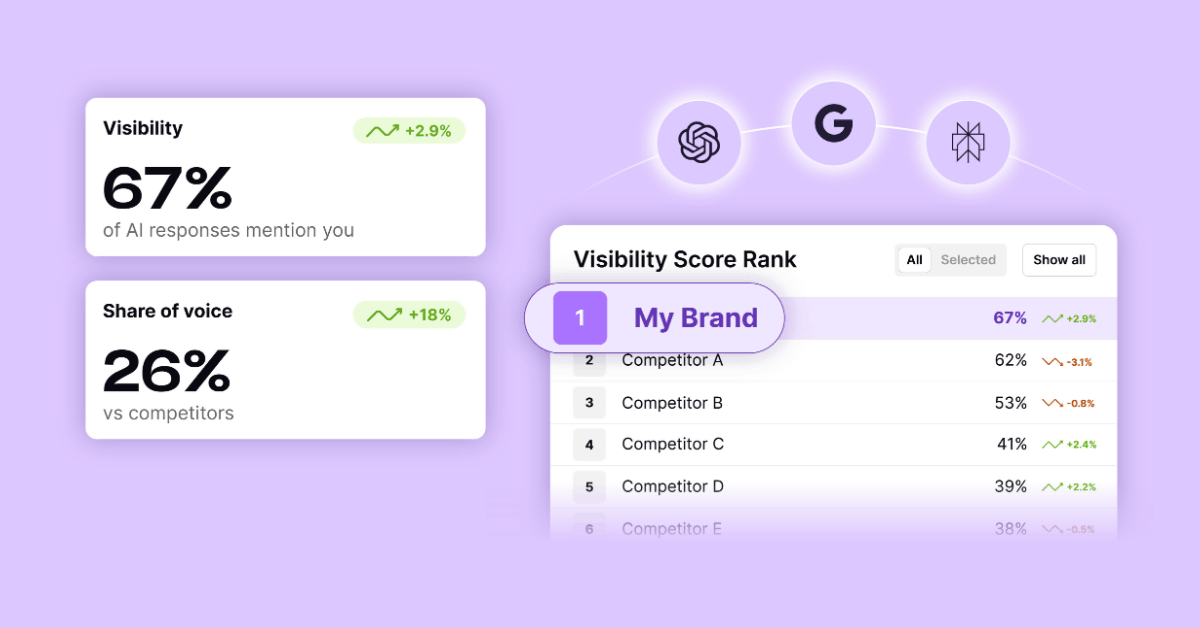

To know how to improve, you have to know where you stand. Measure your current AI visibility in terms of presence rate, citation share, sentiment mix, and model and journey coverage to illustrate your AI share of voice.
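To illustrate two of these metrics, the Python sketch below computes them from a small sample of AI answers. The definitions are our working assumptions for the example, since exact formulas vary by tool: presence rate is taken as the share of sampled answers that mention the brand, and citation share as the share of all cited URLs that point to the brand’s domain. The brand, domain, and answers are invented.

```python
from urllib.parse import urlparse

def presence_rate(answers, brand: str) -> float:
    """Share of answers whose text mentions the brand (assumed definition)."""
    if not answers:
        return 0.0
    mentions = sum(1 for a in answers if brand.lower() in a["text"].lower())
    return mentions / len(answers)

def citation_share(answers, domain: str) -> float:
    """Share of all cited URLs that belong to the given domain (assumed definition)."""
    cited = [url for a in answers for url in a["citations"]]
    if not cited:
        return 0.0

    def is_ours(url: str) -> bool:
        host = (urlparse(url).hostname or "").lower()
        return host == domain or host.endswith("." + domain)

    return sum(1 for url in cited if is_ours(url)) / len(cited)

# Invented sample of AI answers to tracked prompts.
SAMPLE_ANSWERS = [
    {"text": "Acme Apparel is known for ethical manufacturing.",
     "citations": ["https://www.acme.example/about", "https://reviews.example/acme"]},
    {"text": "Several brands qualify.",
     "citations": ["https://news.example/story"]},
    {"text": "Try Acme Apparel or a local maker.",
     "citations": ["https://acme.example/products", "https://forum.example/t/1"]},
    {"text": "No clear recommendation.",
     "citations": []},
]

if __name__ == "__main__":
    print("presence rate:", presence_rate(SAMPLE_ANSWERS, "Acme Apparel"))
    print("citation share:", citation_share(SAMPLE_ANSWERS, "acme.example"))
```

Tracking both numbers over time, per platform and per stage of the journey, shows whether changes to your site are moving your AI share of voice.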

But measurement without action is just observation — so make sure you close the loop by building a plan to address and improve upon what you learn. Our newest release, AI Visibility, works with the rest of the Botify platform to reveal your true AI search performance and maximize your presence across all platforms.

Be found at the source to ensure visibility everywhere

In both AI and traditional search, your visibility drives opportunity. By understanding how and why different platforms source your content, you’re well-positioned to grow your presence as search and technology continue to evolve. Ensure your brand’s content appears wherever consumers, bots, and AI agents seek answers by controlling how you’re found and shaping the journey from there.
